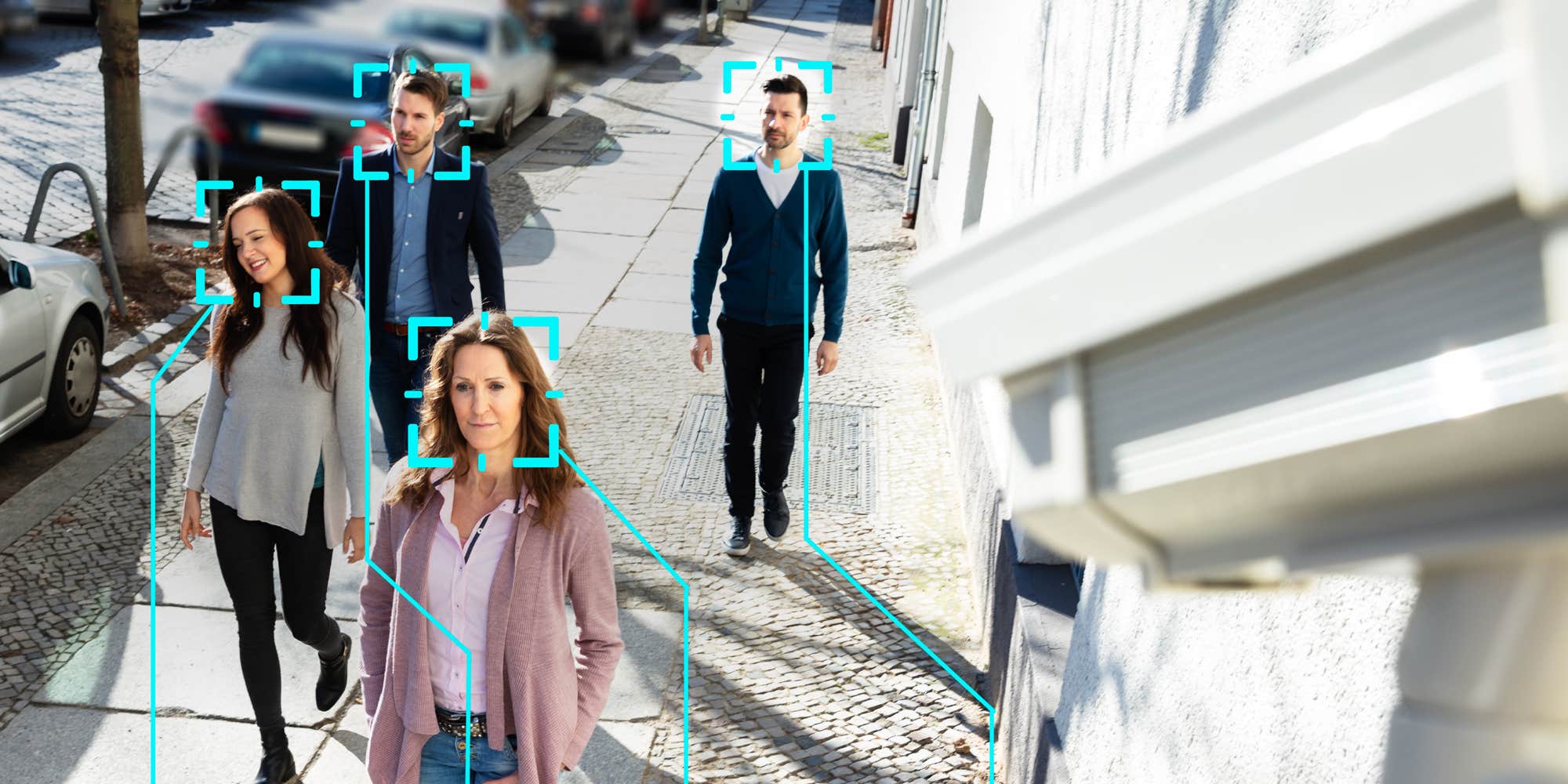

Last year, momentum swung toward reining in facial recognition technology across cities in the U.S. But while concerns over the technology have been made clear, the country remains stuck in a scattered landscape of rules and regulations surrounding it.

Depending on where you are, the rules for facial recognition use can vary significantly, or not exist at all. In Illinois, customers must give businesses consent before they use any biometric information, and that law has been at the heart of numerous lawsuits. In Portland, Oregon, facial recognition is banned. And in New York, a proposed state law could impose restrictions similar to Illinois’s, but Amnesty International is pushing for stricter rules for New York City’s police.

Meanwhile, federal agencies, like the FBI and the TSA, are developing or using the technology—despite it being shown to have a racial bias—with only their internal rules to guide them and no single federal policy, regulation, or law governing the technology’s use. And local police, without city and state regulations, can do the same.

Interest in limiting or banning the technology, particularly government use, has also been fueled by the nationwide protests that followed the police killing of George Floyd in Minneapolis.

Essentially, during the past year, facial recognition and its implications for privacy invasion or an all-encompassing surveillance state have come to a head.

That swirl of movement surrounding the highly controversial technology even made its way to Congress. Several bills were introduced last year that sought to rein in the technology.

The question now becomes: Will that momentum continue in 2021? Many advocacy groups are trying to make sure it does.

Recently, the lack of a single federal policy and the dangers the technology can pose to civil liberties prompted civic organizations and privacy groups, including the ACLU, to write to President Joe Biden's administration urging the president to ban federal agencies from using facial recognition, ban states from using federal money to buy the technology, and support a bill proposed by lawmakers last year that would place a federal moratorium on the technology that could only be lifted by Congress.

That bill, the "Facial Recognition and Biometric Technology Moratorium Act," could have new life now that the House and Senate are controlled by Democrats, a shift in the bill's chances that hasn't gone unnoticed by the lawmakers who originally introduced it.

“A handful of cities, including Portland, Oregon, have already taken this step, but much work remains to be done to ensure that Americans in every corner of the country receive the same essential protections,” Sen. Jeff Merkley (D-Ore.) said in an emailed statement. “That’s why I will be working with my colleagues to again introduce legislation to halt the use of this technology until we have strong safeguards in place. We can’t allow facial recognition technology to continue to be used unchecked to create a surveillance state, especially as it disproportionately and wrongfully singles out Americans of color.”

Merkley sponsored two bills last year. One would have put a moratorium on the technology and the other, which he introduced with Sen. Bernie Sanders (I-Vt.), was modeled after the Illinois biometrics law.

He and others intend to reintroduce legislation, especially now that both chambers of Congress are controlled by Democrats, but whether the bill might be a moratorium or a more limited approach, like Illinois’s, hasn’t been determined, according to Sen. Ed Markey’s (D-Mass.) office. And the timeline for its introduction also hasn’t been determined.

Facial recognition's patchwork of laws

In the absence of a federal policy, the regulations surrounding facial recognition vary widely across the U.S. and across industries.

The patchwork of city and state rules, and in some places the absence of rules, potentially exposes businesses to liability. That is especially true for businesses that operate nationwide, like social media companies and airlines, which must either tailor a policy to each location or create a one-size-fits-all approach that meets the most stringent regulations. Meanwhile, law enforcement can continue to make the rules on how it uses the tools.

“If there isn’t a ban, then that means law enforcement is using it,” Kate Ruane, senior ACLU legislative attorney, said in an emailed statement. “As our letter indicates, the technology disproportionately misidentifies people of color, women, trans and nonbinary people, and other marginalized communities. These inaccuracies can lead to improper arrests and other negative interactions with law enforcement for communities that are already over-surveilled.”

The people and organizations that want to limit or ban the use of the technology note that it is better at identifying white people than people of color. In terms of law enforcement use, that has led to false arrests.

But they also want to make sure that businesses cannot profit from people’s personal data, like the shape of a face, or the retina pattern in the back of an eye.

“Moreover, these technologies are dangerous even when they work,” Ruane said. “They purport to be able to track us across space and time, everywhere we go, including to houses of worship, healthcare appointments, protests, and other constitutionally protected or private activities.”

Law enforcement has been using the technology to identify people accused of participating in the insurrection at the U.S. Capitol on Jan. 6. One prominent—yet highly criticized—facial recognition company used by police, Clearview AI, believes that using facial recognition for research to identify people after the fact is a legitimate use of the technology because it differs from continual surveillance of law-abiding people.

However, Clearview’s technology has been controversial because it scrapes public images from the internet, including those on social networks like Facebook, Instagram, and Twitter, without users' knowledge or consent. The company has amassed a database of people's photos this way and has been sued by the ACLU.

The New York Times reported that searches on the company’s platform spiked 26% after the Capitol riot. Police departments in Miami, Florida, and Oxford, Alabama, have used the technology to identify suspects, according to the Times and the Wall Street Journal.

But privacy advocates have long warned about police use of the technology.

“At this time, the technology is far too concerning for law enforcement to be deploying in criminal investigations,” Ruane said. “That is why we have called on Congress to study the technology to determine whether and in what narrow circumstances its use might be appropriate and the standards the technology must meet before law enforcement could access it.”

In some instances, consumers agree to allow companies like Facebook and Apple, as well as airlines, to collect their biometric data, which makes it easier to tag photos, unlock phones, and check in for a flight.

But the technology is also being used by retailers to track customers through stores to prevent thefts and determine shopping behaviors for reward programs and targeted advertising.

Delta, which has recently expanded its facial recognition program in the United States, declined to answer specific questions about how it intends to manage the patchwork of regulations for its customers who might fly from a city where the technology is permitted to a city where it might be banned. A company spokesperson deferred comment to the airline advocacy organization Airlines for America.

The organization declined to answer specific questions, but in an emailed statement, a spokesperson said: “We support the federal authority that preempts a state-by-state regulation of the U.S. airline industry.”

In Illinois, face, eye, fingerprint, and other biometric data all fall under the law. If a business breaks the law, it can be sued. Facebook has already been ensnared in a multimillion-dollar settlement for using face scans to identify its members in photos in the state.

The ban in Portland, Oregon, is considered to be among the most sweeping sets of regulations of its kind in the nation. Government departments and agencies and public businesses, like stores and restaurants, will not be allowed to use the technology to identify people by their faces. But it has exceptions for social media.

The ACLU said it has not formally received a response from the Biden administration about its letter. But the push for regulations and limitations on how the technology can be used by governments and businesses will continue.

“Americans across the country elected a new president and a new Senate majority in part because they want their government to heed their call for racial justice,” Merkley said. “That justice must include careful consideration of, and guardrails for, the use of facial recognition technology — which can easily be used ‘Big Brother’-style to violate Americans' privacy and has already brought new forms of racial discrimination and bias into law enforcement.”

The post Will the U.S. finally get its act together on facial recognition laws? appeared first on The Daily Dot.
